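Many Scrapy users probably want to run their spiders on a cloud server, just as ZZKOOK does: once a scheduled task is set up on the server, the local PC can be shut down and the data downloaded whenever convenient. ZZKOOK's cloud server runs CentOS 7, so the Scrapy runtime environment has to be installed on it. This should be simple, but because module versions and the set of installed packages differ from system to system, quite a few errors can come up. ZZKOOK has collected the problems encountered and their fixes below for reference: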

1. Basic commands

sudo yum update

sudo yum -y install libxslt-devel pyOpenSSL python-lxml python-devel gcc

sudo easy_install scrapy

Entering the basic commands above starts the Scrapy installation.

2. Problems encountered

Error 1: ImportError: 'module' object has no attribute 'check_specifier'

Running easy_install scrapy failed with the following traceback:

Traceback (most recent call last):

File "/usr/bin/easy_install", line 9, in <module>

load_entry_point('setuptools==0.9.8', 'console_scripts', 'easy_install')()

File "/usr/lib/python2.7/site-packages/setuptools/command/easy_install.py", line 1992, in main

with_ei_usage(lambda:

File "/usr/lib/python2.7/site-packages/setuptools/command/easy_install.py", line 1979, in with_ei_usage

return f()

File "/usr/lib/python2.7/site-packages/setuptools/command/easy_install.py", line 1996, in <lambda>

distclass=DistributionWithoutHelpCommands, **kw

File "/usr/lib64/python2.7/distutils/core.py", line 152, in setup

dist.run_commands()

File "/usr/lib64/python2.7/distutils/dist.py", line 953, in run_commands

self.run_command(cmd)

File "/usr/lib64/python2.7/distutils/dist.py", line 972, in run_command

cmd_obj.run()

File "/usr/lib/python2.7/site-packages/setuptools/command/easy_install.py", line 380, in run

self.easy_install(spec, not self.no_deps)

File "/usr/lib/python2.7/site-packages/setuptools/command/easy_install.py", line 623, in easy_install

return self.install_item(spec, dist.location, tmpdir, deps)

File "/usr/lib/python2.7/site-packages/setuptools/command/easy_install.py", line 653, in install_item

dists = self.install_eggs(spec, download, tmpdir)

File "/usr/lib/python2.7/site-packages/setuptools/command/easy_install.py", line 849, in install_eggs

return self.build_and_install(setup_script, setup_base)

File "/usr/lib/python2.7/site-packages/setuptools/command/easy_install.py", line 1130, in build_and_install

self.run_setup(setup_script, setup_base, args)

File "/usr/lib/python2.7/site-packages/setuptools/command/easy_install.py", line 1115, in run_setup

run_setup(setup_script, args)

File "/usr/lib/python2.7/site-packages/setuptools/sandbox.py", line 69, in run_setup

lambda: execfile(

File "/usr/lib/python2.7/site-packages/setuptools/sandbox.py", line 120, in run

return func()

File "/usr/lib/python2.7/site-packages/setuptools/sandbox.py", line 71, in <lambda>

{'__file__':setup_script, '__name__':'__main__'}

File "setup.py", line 79, in <module>

File "/usr/lib64/python2.7/distutils/core.py", line 112, in setup

_setup_distribution = dist = klass(attrs)

File "/usr/lib/python2.7/site-packages/setuptools/dist.py", line 269, in __init__

_Distribution.__init__(self,attrs)

File "/usr/lib64/python2.7/distutils/dist.py", line 287, in __init__

self.finalize_options()

File "/usr/lib/python2.7/site-packages/setuptools/dist.py", line 302, in finalize_options

ep.load()(self, ep.name, value)

File "/usr/lib/python2.7/site-packages/pkg_resources/__init__.py", line 2303, in load

return self.resolve()

File "/usr/lib/python2.7/site-packages/pkg_resources/__init__.py", line 2313, in resolve

raise ImportError(str(exc))

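ImportError: 'module' object has no attribute 'check_specifier'

This happens because the installed setuptools module is too old (the traceback shows setuptools 0.9.8). Upgrading it fixes the problem: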

yum -y install epel-release

yum -y install python-pip

pip install --upgrade setuptools==30.1.0
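To tell in advance whether a machine needs this upgrade, the installed version can be compared with the one used above. The following is only a minimal sketch: the helper names version_tuple and is_older are made up for illustration, and 30.1.0 is simply the version ZZKOOK upgraded to, not a documented minimum.

```python
import re


def version_tuple(version):
    """Turn a version string such as '30.1.0' into (30, 1, 0).

    Only the leading digits of each dot-separated piece are kept,
    so '19.2.0rc1' becomes (19, 2, 0).
    """
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def is_older(installed, required):
    """Return True if `installed` is a lower version than `required`."""
    return version_tuple(installed) < version_tuple(required)
```

On the server, the installed version can be read with `import setuptools; setuptools.__version__` and passed to is_older together with "30.1.0".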

Error 2: error: Setup script exited with error: command 'gcc' failed with exit status 1

Running easy_install scrapy again took a little longer, but then failed with this error. The details are as follows:

Package libffi was not found in the pkg-config search path.

Perhaps you should add the directory containing `libffi.pc'

to the PKG_CONFIG_PATH environment variable

No package 'libffi' found

Package libffi was not found in the pkg-config search path.

Perhaps you should add the directory containing `libffi.pc'

to the PKG_CONFIG_PATH environment variable

No package 'libffi' found

Package libffi was not found in the pkg-config search path.

Perhaps you should add the directory containing `libffi.pc'

to the PKG_CONFIG_PATH environment variable

No package 'libffi' found

Package libffi was not found in the pkg-config search path.

Perhaps you should add the directory containing `libffi.pc'

to the PKG_CONFIG_PATH environment variable

No package 'libffi' found

Package libffi was not found in the pkg-config search path.

Perhaps you should add the directory containing `libffi.pc'

to the PKG_CONFIG_PATH environment variable

No package 'libffi' found

c/_cffi_backend.c:15:17: fatal error: ffi.h: No such file or directory

#include <ffi.h>

^

compilation terminated.

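error: Setup script exited with error: command 'gcc' failed with exit status 1

This error is again caused by missing packages; the ffi.h message above shows that the libffi development headers are not installed. The following command resolves it: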

yum install gcc libffi-devel python-devel openssl-devel
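Before retrying a long build, it can help to confirm that the header the compiler complained about is now present. The sketch below assumes nothing beyond the standard library; find_header is a hypothetical helper, and the directories to search (for example /usr/include and /usr/lib64) are an assumption about where the packages place their headers.

```python
import os


def find_header(name, include_dirs):
    """Return the first path below `include_dirs` that contains `name`.

    Directories that do not exist are skipped silently, because
    os.walk yields nothing for them. Returns None if nothing matches.
    """
    for base in include_dirs:
        for root, _dirs, files in os.walk(base):
            if name in files:
                return os.path.join(root, name)
    return None
```

For example, find_header("ffi.h", ["/usr/include", "/usr/lib64"]) returning None would suggest that libffi-devel is still missing.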

After running this command, ZZKOOK finally saw the message confirming that Scrapy was installed: Finished processing dependencies for scrapy

Error 3: ImportError: No module named _util

Now that the installation has succeeded, let's start with a simple scrapy shell command:

scrapy shell "http://baidu.com"

Unexpectedly, it failed again:

Traceback (most recent call last):

File "/usr/bin/scrapy", line 11, in <module>

load_entry_point('Scrapy==1.6.0', 'console_scripts', 'scrapy')()

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/cmdline.py", line 150, in execute

_run_print_help(parser, _run_command, cmd, args, opts)

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/cmdline.py", line 90, in _run_print_help

func(*a, **kw)

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/cmdline.py", line 157, in _run_command

cmd.run(args, opts)

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/commands/shell.py", line 66, in run

crawler = self.crawler_process._create_crawler(spidercls)

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/crawler.py", line 205, in _create_crawler

return Crawler(spidercls, self.settings)

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/crawler.py", line 55, in __init__

self.extensions = ExtensionManager.from_crawler(self)

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/middleware.py", line 53, in from_crawler

return cls.from_settings(crawler.settings, crawler)

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/middleware.py", line 34, in from_settings

mwcls = load_object(clspath)

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/utils/misc.py", line 44, in load_object

mod = import_module(module)

File "/usr/lib64/python2.7/importlib/__init__.py", line 37, in import_module

__import__(name)

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/extensions/memusage.py", line 16, in <module>

from scrapy.mail import MailSender

File "/usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy/mail.py", line 25, in <module>

from twisted.internet import defer, reactor, ssl

File "/usr/lib/python2.7/site-packages/Twisted-19.2.0rc1-py2.7-linux-x86_64.egg/twisted/internet/ssl.py", line 230, in <module>

from twisted.internet._sslverify import (

File "/usr/lib/python2.7/site-packages/Twisted-19.2.0rc1-py2.7-linux-x86_64.egg/twisted/internet/_sslverify.py", line 14, in <module>

from OpenSSL._util import lib as pyOpenSSLlib

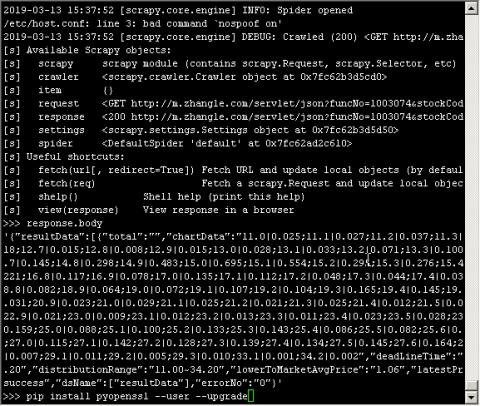

ImportError: No module named _util

The error clearly comes from the pyOpenSSL module, so I tried upgrading it:

pip install pyopenssl --user --upgrade
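Whether the upgrade took effect can be checked without starting Scrapy, by trying the same import that failed in the traceback. This is a minimal sketch; can_import is a hypothetical helper name, and it only catches ImportError, so any other exception raised while importing will still propagate.

```python
import importlib


def can_import(module_name):
    """Return True if `module_name` can be imported, False on ImportError."""
    try:
        importlib.import_module(module_name)
    except ImportError:
        return False
    return True
```

Calling can_import("OpenSSL._util") should return True once a sufficiently new pyOpenSSL is the one Python picks up.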

Running the fetch test again, it finally worked.

Error 4: scrapy ImportError: No module named contrib.exporter

I thought ZZKOOK's own spider could now be moved to the cloud server, but running the spider zldaily with the following command produced the error above:

scrapy crawl zldaily -o 20190313.csv

Tracing the cause, I found that my code imports a module with this statement:

from scrapy.contrib.exporter import CsvItemExporter

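On inspection, the Scrapy installation directory on my local Ubuntu system contains two folders, contrib and contrib_exp, while the Scrapy installation directory on the CentOS cloud server does not. So, as a quick and crude fix, I copied these two directories from the local machine into the scrapy directory on the cloud server: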

cd /usr/lib/python2.7/site-packages/Scrapy-1.6.0-py2.7.egg/scrapy

cp -rf /home/zzkook/contrib_exp ./

cp -rf /home/zzkook/contrib ./

Running my spider again, the output was written to the CSV file successfully.
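An alternative to copying directories into the installation is to change the import in the spider code. As far as the Scrapy release notes go, the exporters have lived in scrapy.exporters since Scrapy 1.0 and the old scrapy.contrib path was dropped in later releases, which would explain why the folder is absent under Scrapy 1.6.0. The sketch below is not what ZZKOOK did; load_first is a hypothetical helper that tries the new location first and falls back to the old one.

```python
import importlib


def load_first(candidates, attr):
    """Return `attr` from the first module in `candidates` that provides it.

    Modules that cannot be imported are skipped; ImportError is raised
    only if none of the candidates offers the attribute.
    """
    for module_name in candidates:
        try:
            module = importlib.import_module(module_name)
        except ImportError:
            continue
        if hasattr(module, attr):
            return getattr(module, attr)
    raise ImportError("%s not found in any of: %s" % (attr, ", ".join(candidates)))


# Intended use in the spider project (requires Scrapy to be installed):
# CsvItemExporter = load_first(
#     ["scrapy.exporters", "scrapy.contrib.exporter"], "CsvItemExporter")
```

If only newer Scrapy versions need to be supported, replacing the statement with `from scrapy.exporters import CsvItemExporter` is the simpler option.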

Good luck!

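Copyright belongs to the author. For commercial reproduction, please contact the site author for authorization; for non-commercial reproduction, please credit ZZKOOK as the source.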
